BoneXpert validation study in Turkey

Researchers from Koc University Hospital in Istanbul have published a study validating two bone age systems: BoneXpert and Vuno.

A total of 292 images were rated with BoneXpert and Vuno Med bone age, and the results were compared to a reference formed from two manual ratings.

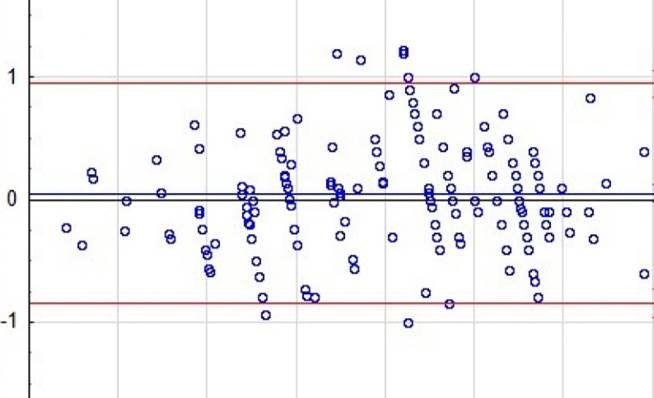

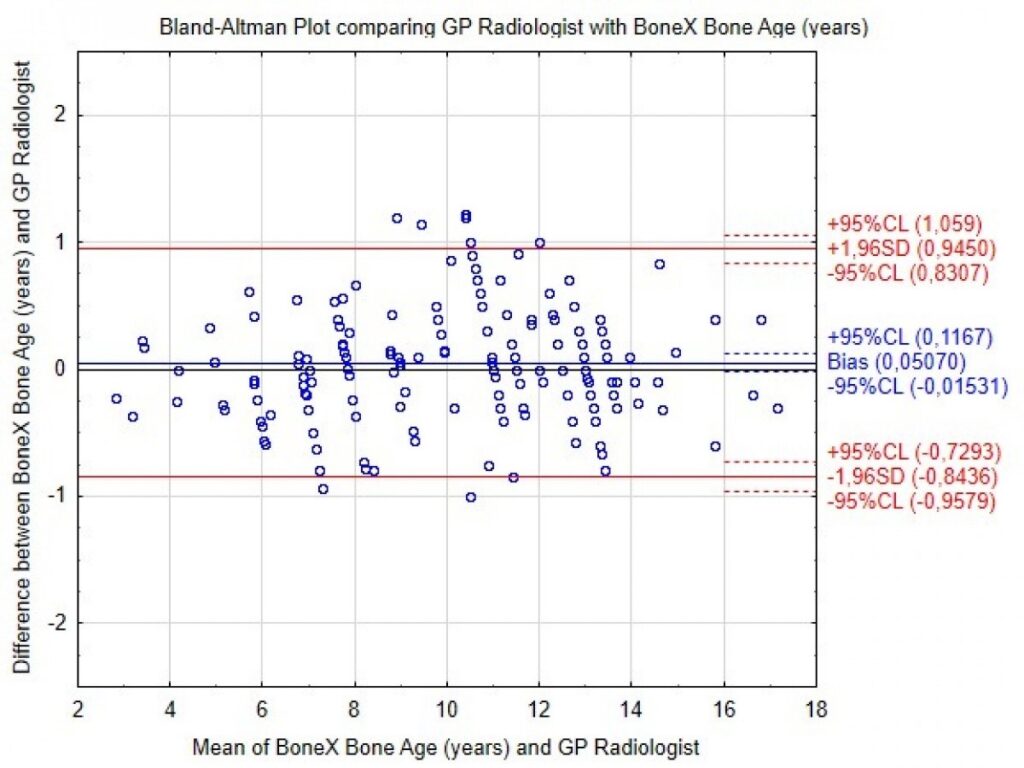

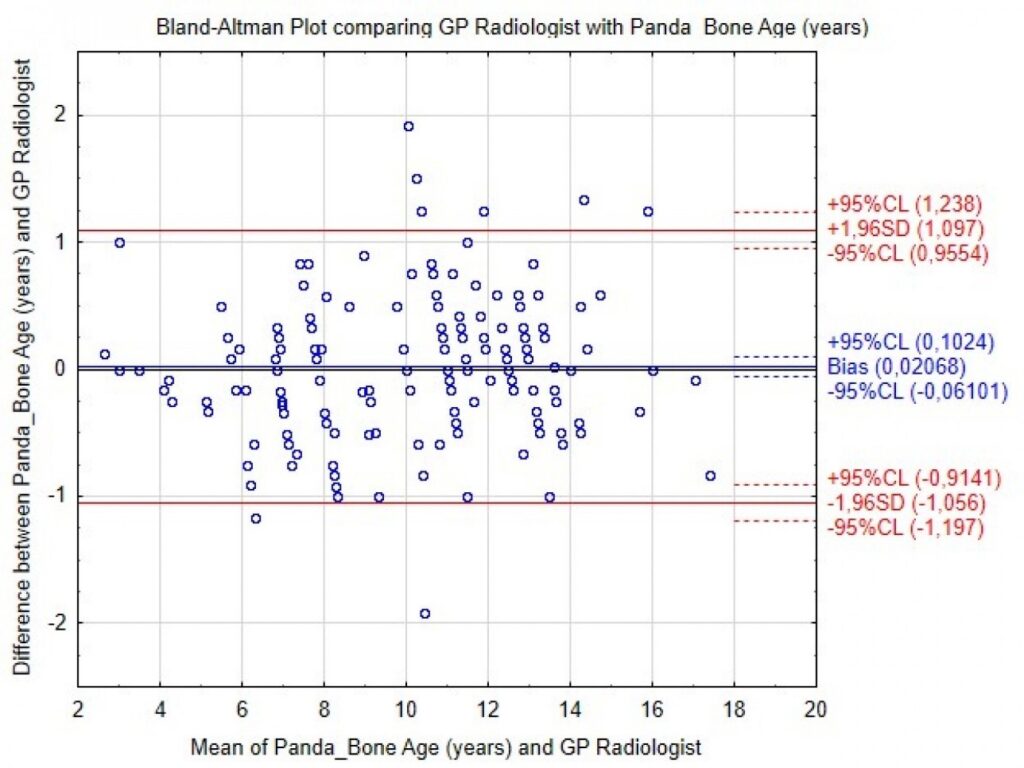

The accuracy of the two systems was found to be the same, as illustrated in the Bland-Altman plot for girls.
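For readers unfamiliar with Bland-Altman analysis, the sketch below computes the quantities such a plot usually shows as horizontal lines: the mean difference (bias) between an automated rating and the reference, and the 95% limits of agreement. The function name `bland_altman` and all numbers are hypothetical and chosen only for illustration; they are not data from the study, and the study's exact analysis may differ.

```python
import statistics

def bland_altman(automated, reference):
    """Return (bias, lower_limit, upper_limit) for paired ratings in years."""
    if len(automated) != len(reference) or len(automated) < 2:
        raise ValueError("need two equally long series with at least two pairs")
    # Per-image difference between the automated rating and the reference.
    diffs = [a - r for a, r in zip(automated, reference)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    # 95% limits of agreement: bias +/- 1.96 standard deviations.
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

# Hypothetical bone ages in years, for illustration only (not study data).
automated = [7.5, 9.1, 11.8, 13.2, 5.9]
reference = [7.3, 9.4, 11.5, 13.0, 6.2]

bias, lower, upper = bland_altman(automated, reference)
print(f"bias = {bias:.2f} y, limits of agreement = [{lower:.2f}, {upper:.2f}] y")
```

Two systems with similar bias and similarly narrow limits of agreement against the same reference would be judged equally accurate in this sense.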

Accuracy is one aspect of an automated method – the ability to explain the result is another, and here the two systems are very different, as the authors illustrated by juxtaposing the outputs from BoneXpert and Vuno:

BoneXpert’s main bone age result, 7.54 y, is the average over the 21 tubular bones, with each bone weighted equally. In addition, BoneXpert reports a carpal bone age, which is the average over the 7 carpals.

These results are “explained” by showing the contour of each bone as well as its bone age. Occasionally, a bone is left out due to abnormal shape, but not in this example.
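The equal-weight averaging described above, including the occasional omission of a bone, can be sketched as follows. This is a minimal illustration and not BoneXpert's implementation: the function name `equal_weight_bone_age`, the bone label `MC3`, the use of `None` to mark a bone that was left out, and all per-bone values except the 9.0 y for PP2 are assumptions made for the example.

```python
from statistics import mean

def equal_weight_bone_age(per_bone_ages):
    """Average the per-bone ages with equal weights, skipping omitted bones."""
    # A bone marked None was left out (e.g. because of abnormal shape).
    used = [age for age in per_bone_ages.values() if age is not None]
    if not used:
        raise ValueError("no bones available for averaging")
    return mean(used)

# Hypothetical per-bone ages in years; only a few bones are shown for brevity.
per_bone_ages = {
    "radius": 7.4,
    "ulna": 7.2,
    "PP2": 9.0,
    "DP3": 7.0,
    "MC3": None,  # hypothetical bone omitted from the average
}

bone_age = equal_weight_bone_age(per_bone_ages)
print(f"bone age = {bone_age:.2f} y from {len(per_bone_ages) - 1} bones")
```

Because every per-bone value is visible, a reader can check which bones entered the average and how much each one deviates from it.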

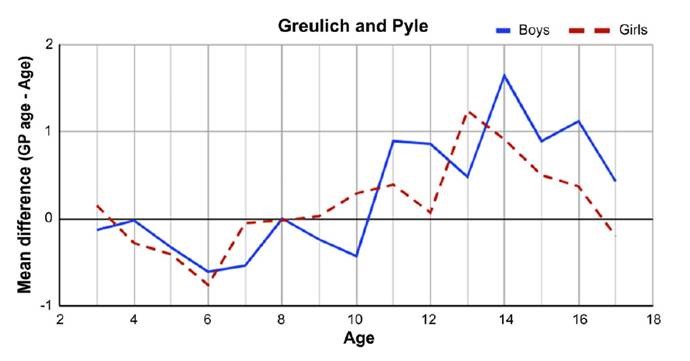

Vuno’s “explanation” is a heat map depicting where the deep neural net’s output is most sensitive to changes in the image. In this example, it showed high intensity in the radius, ulna, PP2 and DP3. Since the most reliable bone age is derived by averaging over as many bones as possible, it is of concern that the method drew its information mainly from these four bones. BoneXpert’s output suggested that PP2 has a bone age of 9.0 y, much larger than the average of 7.5 y. BoneXpert assigns the same weight to all 21 bones, which tends to average out differences between the bones and thereby provides precision and standardisation. In a follow-up exam, the Vuno method could, at its own discretion, focus on a different small subset of bones, which would raise the question of whether a change in bone age was due to a biological change or merely a result of emphasising a different subset of bones.
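The concern about subset emphasis can be made concrete with a toy calculation. The sketch below is not a model of how Vuno's neural network works; it simply treats "focus" as a weighted average over a few bones, which is an assumption made for illustration. The function name `weighted_bone_age`, the bone labels `MC2` and `MP3`, and all per-bone values except the 9.0 y for PP2 are hypothetical.

```python
def weighted_bone_age(ages, weights):
    """Weighted average of per-bone ages; bones without a weight are ignored."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(ages[bone] * w for bone, w in weights.items()) / total

# Hypothetical per-bone ages in years for one unchanged hand.
ages = {"radius": 7.2, "ulna": 7.0, "PP2": 9.0, "DP3": 7.4, "MC2": 7.1, "MP3": 7.3}

equal = {bone: 1.0 for bone in ages}  # every bone counts the same
focus_a = {"radius": 1.0, "ulna": 1.0, "PP2": 1.0, "DP3": 1.0}  # first exam
focus_b = {"radius": 1.0, "ulna": 1.0, "MC2": 1.0, "MP3": 1.0}  # follow-up exam

all_bones = weighted_bone_age(ages, equal)
exam_a = weighted_bone_age(ages, focus_a)
exam_b = weighted_bone_age(ages, focus_b)
print(f"all bones: {all_bones:.2f} y, subset A: {exam_a:.2f} y, subset B: {exam_b:.2f} y")
```

In this toy setting the per-bone ages are identical in both exams, yet the two subsets give different results, purely because one includes the advanced PP2 and the other does not.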

The full article pdf is freely available here